Let's break down Quaily's technical architecture today. First, let's establish a few principles:

- No vendor lock-in, including not being locked into Cloudflare, with the ability to migrate to plain Linux machines at any time.

- Minimize dependencies whenever possible.

- Avoid learning new technologies unless necessary.

- No over-engineering if requirements are met.

- Only consider performance optimization where it's most needed.

That's it. Let's begin.

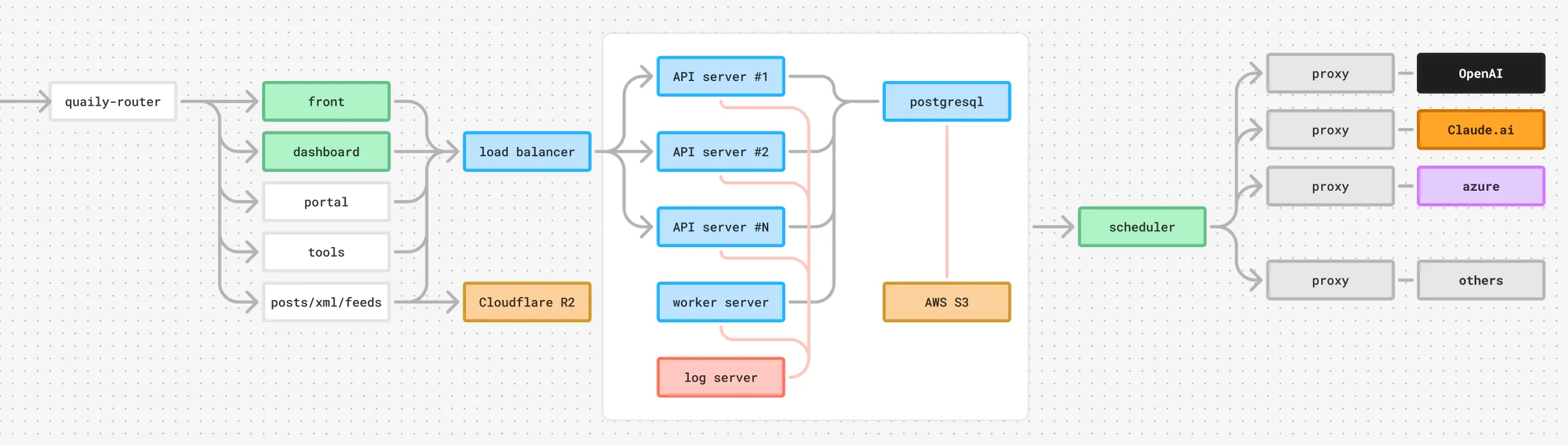

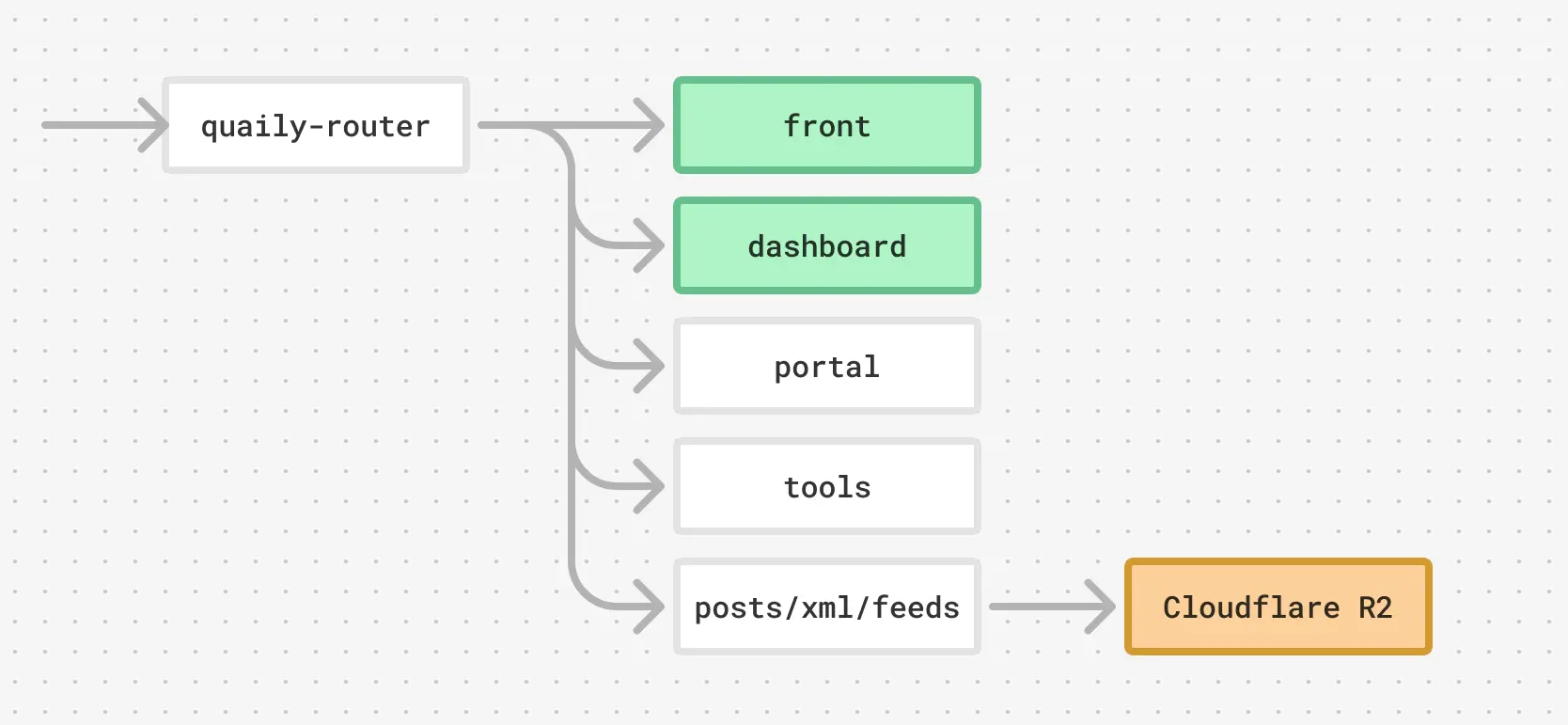

Routing

Opening quaily.com takes you to Quaily's homepage. This is a Hugo site deployed on Cloudflare.

However, the domain quaily.com doesn't point directly to the Hugo-deployed worker, but rather to a worker responsible for traffic distribution - let's call it quaily-router.

This router directs traffic to several workers based on request paths and other conditions:

    routes = ["quaily.com/*"]

    services = [
      { binding = "front", service = "front" },
      { binding = "dashboard", service = "dashboard" },
      { binding = "portal", service = "portal" },
      { binding = "tools", service = "tools" },
    ]

Among these, front and dashboard are Vue SPAs, portal is the homepage, and tools is a hand-written static site for Tools.

Additionally, quaily-router handles all static resource requests, including:

- Article page requests, like https://quaily.com/lyric_na/p/breaking-down-quailys-technical-architecture-a-no-frills-approach

- Article list page requests, like https://quaily.com/lyric_na

- Sitemap and feed requests, like https://quaily.com/lyric_na/sitemap_index.xml and https://quaily.com/lyric_na/feed/atom

These static resources are stored in Cloudflare R2.

So a complete request to the main domain quaily.com flows like this:

Although quaily-router is implemented as a Cloudflare Worker, it could be replaced with traditional methods like nginx if needed, and R2 is S3-compatible. So there's no Cloudflare lock-in.
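
To make the routing decision concrete, here is a minimal sketch in Go of how a router could pick a target from the request path. It is not the actual worker code: the rules are assumptions inferred from the example URLs above, the `/tools` prefix is hypothetical, and `r2` simply stands for "read the static file from object storage".

```go
package main

import (
	"fmt"
	"strings"
)

// Target names mirror the service bindings in the router config.
// "r2" stands for static content read from object storage.
const (
	targetPortal    = "portal"
	targetDashboard = "dashboard"
	targetTools     = "tools"
	targetFront     = "front"
	targetR2        = "r2"
)

// route picks a target for a request path. The rules are assumptions
// inferred from the example URLs, not Quaily's actual routing table.
func route(path string) string {
	segs := strings.FieldsFunc(path, func(r rune) bool { return r == '/' })
	switch {
	case len(segs) == 0:
		return targetPortal // "/" is the homepage
	case segs[0] == "dashboard":
		return targetDashboard
	case segs[0] == "tools": // hypothetical prefix for the tools site
		return targetTools
	case len(segs) == 1:
		return targetR2 // "/{list}" is a static article list
	case len(segs) == 3 && segs[1] == "p":
		return targetR2 // "/{list}/p/{slug}" is a static article
	case len(segs) == 2 && segs[1] == "sitemap_index.xml":
		return targetR2
	case len(segs) == 3 && segs[1] == "feed":
		return targetR2 // for example "/{list}/feed/atom"
	default:
		return targetFront // everything else goes to the front SPA
	}
}

func main() {
	paths := []string{"/", "/dashboard/posts", "/lyric_na", "/lyric_na/p/hello", "/lyric_na/feed/atom", "/lyric/about"}
	for _, p := range paths {
		fmt.Printf("%-24s -> %s\n", p, route(p))
	}
}
```

Because the decision is a pure function of the path, the same table could be expressed as nginx `location` rules if the router ever moved off Cloudflare.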

Frontend

Dashboard and front are simple Vue SPAs.

Specifically:

- Dashboard operates at quaily.com/dashboard, handling all UI business after login.

- Front handles all pages except articles and article lists, like quaily.com/lyric/about, etc.

As shown in the diagram above, articles and article lists aren't SPAs or backend-rendered results, but rather static HTML pages.

The benefit of this approach is minimal latency from request to content display - quaily-router just needs to read from R2 and return the content. Average latency is under 100ms, 3-5 times faster than typical content sites.

Dynamic content on articles and article lists (like subscription forms) is implemented by mounting Vue SPAs onto the HTML. While this content takes extra time to load, it doesn't stop people or search engines from reading the articles and lists before loading completes.
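
The "read from storage and return" step is small enough to sketch. The Go example below assumes a hypothetical `ObjectStore` interface standing in for R2 (or any S3-compatible bucket) and an invented key layout of `{path}/index.html`; neither comes from Quaily's code, and an in-memory map replaces the real bucket so the example runs on its own.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// ObjectStore is a hypothetical stand-in for R2 or any S3-compatible bucket.
type ObjectStore interface {
	Get(key string) ([]byte, bool)
}

// memStore is an in-memory ObjectStore used only for this example.
type memStore map[string][]byte

func (m memStore) Get(key string) ([]byte, bool) {
	b, ok := m[key]
	return b, ok
}

// objectKey maps a page path to a bucket key. The layout is an assumption.
func objectKey(path string) string {
	key := strings.Trim(path, "/")
	if key == "" {
		return "index.html"
	}
	return key + "/index.html"
}

// staticHandler serves pre-rendered HTML straight from the store.
func staticHandler(store ObjectStore) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		body, ok := store.Get(objectKey(r.URL.Path))
		if !ok {
			http.NotFound(w, r)
			return
		}
		w.Header().Set("Content-Type", "text/html; charset=utf-8")
		w.Write(body)
	})
}

func main() {
	store := memStore{"lyric_na/index.html": []byte("<h1>lyric_na</h1>")}
	rec := httptest.NewRecorder()
	staticHandler(store).ServeHTTP(rec, httptest.NewRequest("GET", "/lyric_na", nil))
	fmt.Println(rec.Code, rec.Body.String())
}
```

There is no template rendering or database query on the request path, which is where the low latency comes from.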

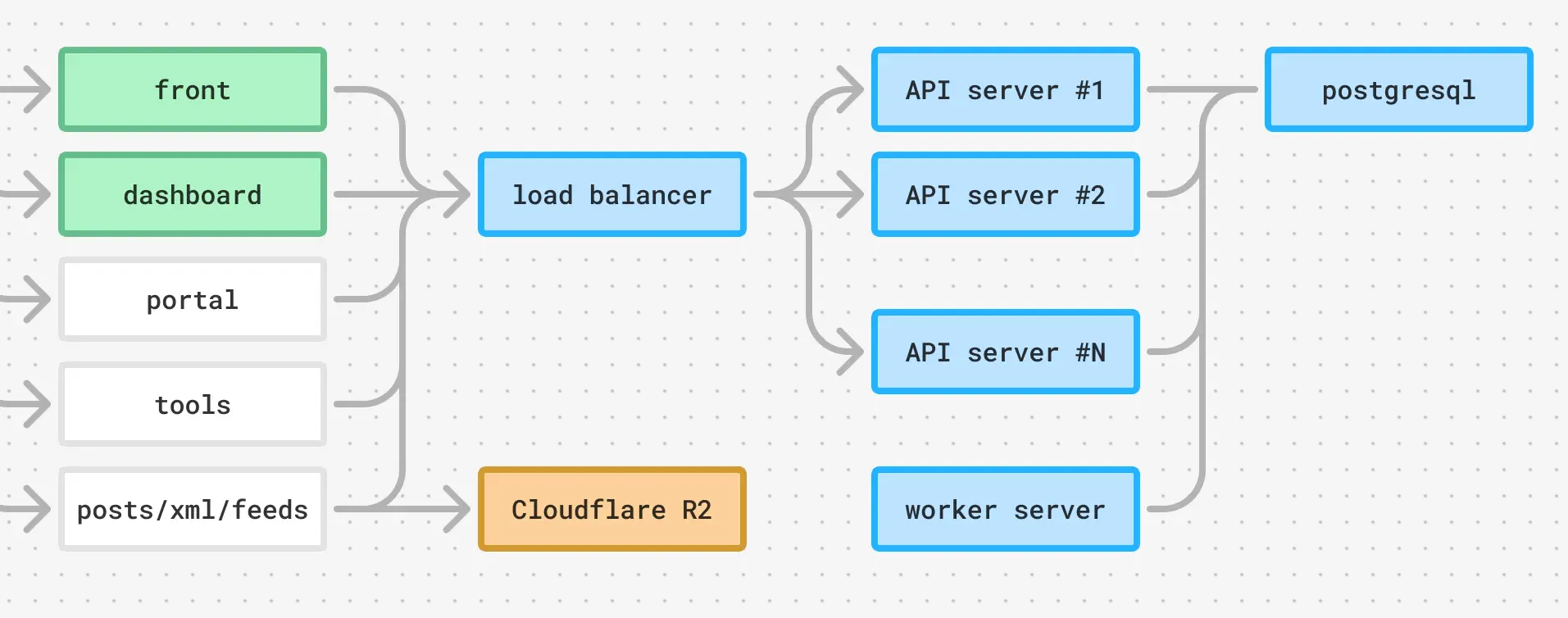

Backend

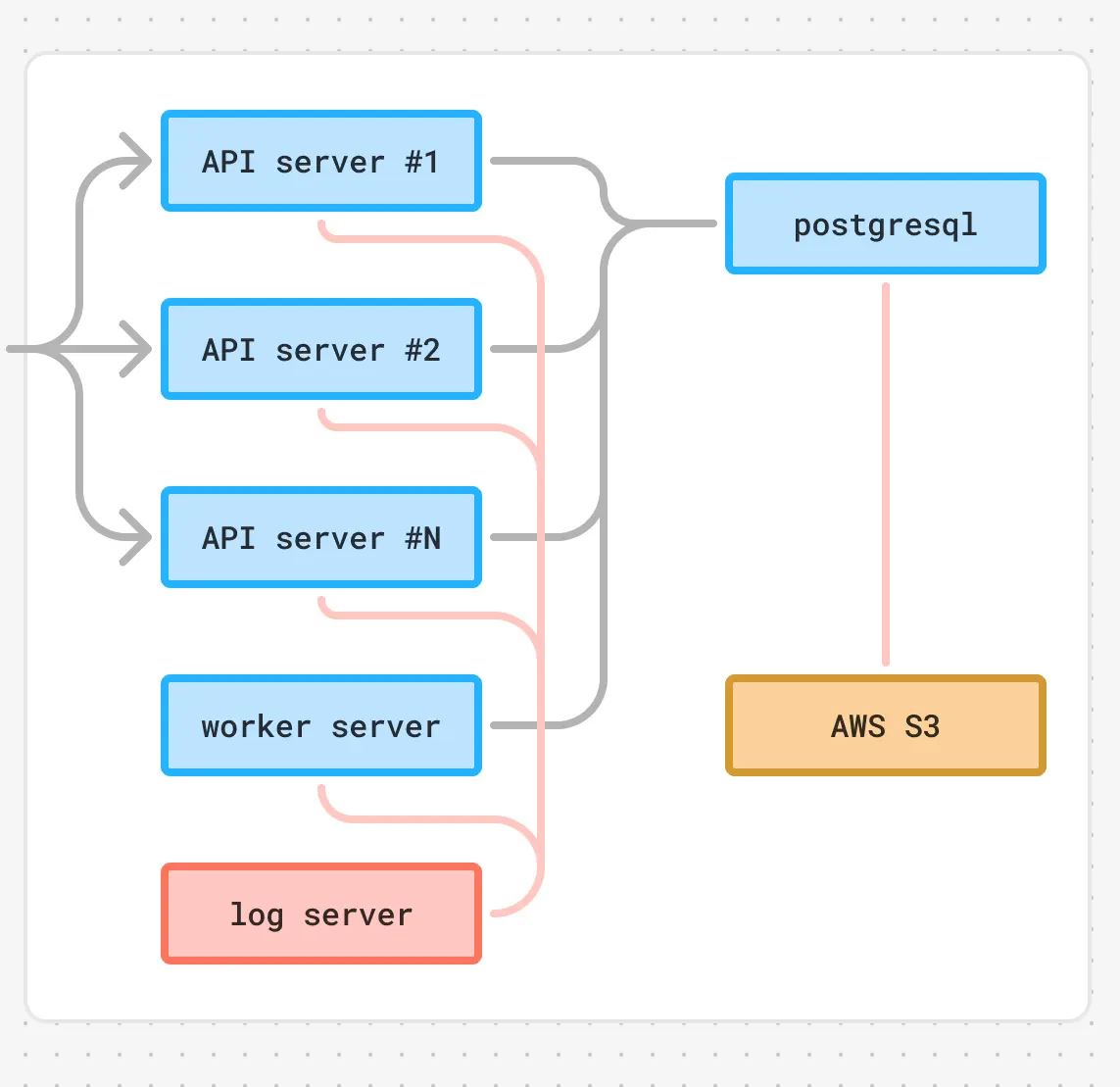

The frontend interacts with the backend through RESTful APIs, with endpoints at api.quail.ink pointing to a load balancer. The load balancer uses AWS's default configuration but can be switched to nginx anytime without lock-in.

Behind the load balancer are multiple instances providing API services, plus one worker instance handling background tasks. They're actually all the same program written in Go, connecting to the same PostgreSQL database instance.
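
One common way to run the same Go program as either an API instance or a worker is a startup flag. The sketch below assumes a hypothetical `-role` flag with values `api` and `worker`; the source doesn't say how Quaily actually selects the mode, and `runAPI` and `runWorker` are placeholders.

```go
package main

import (
	"flag"
	"fmt"
	"os"
)

// parseRole validates the role a process should run as.
func parseRole(s string) (string, error) {
	switch s {
	case "api", "worker":
		return s, nil
	default:
		return "", fmt.Errorf("unknown role %q (want api or worker)", s)
	}
}

// runAPI and runWorker are placeholders for the real entry points.
func runAPI()    { fmt.Println("serving REST API (placeholder)") }
func runWorker() { fmt.Println("processing background tasks (placeholder)") }

func main() {
	roleFlag := flag.String("role", "api", "process role: api or worker")
	flag.Parse()

	role, err := parseRole(*roleFlag)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(2)
	}
	if role == "worker" {
		runWorker()
		return
	}
	runAPI()
}
```

With this shape, one build artifact and one systemd unit template cover both kinds of instance; only the flag differs.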

The complete frontend-backend architecture looks like this:

Operations

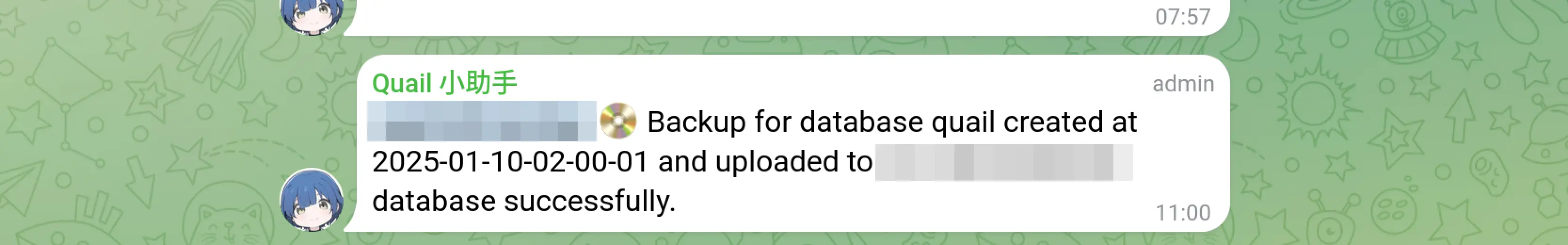

Database Backup

A cron script backs up the database daily to encrypted S3 and sends a notification to a Telegram channel.

Many business notifications are sent to Telegram
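
Sending a message to a Telegram channel only takes one HTTP call to the Bot API's `sendMessage` method. The Go sketch below is an illustration rather than Quaily's script: the environment variable names `TG_BOT_TOKEN` and `TG_CHAT_ID`, the host name `db-0`, and the message wording are all assumptions. Without credentials it only prints the message.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
	"os"
	"time"
)

// sendMessageURL builds the Telegram Bot API endpoint for sendMessage.
func sendMessageURL(base, token string) string {
	return fmt.Sprintf("%s/bot%s/sendMessage", base, token)
}

// backupMessage formats the notification text; the wording is illustrative.
func backupMessage(host, date string, sizeBytes int64) string {
	return fmt.Sprintf("backup ok: host=%s date=%s size=%dMB", host, date, sizeBytes/(1<<20))
}

// notify posts text to a chat through the Bot API.
func notify(client *http.Client, base, token, chatID, text string) error {
	form := url.Values{"chat_id": {chatID}, "text": {text}}
	resp, err := client.PostForm(sendMessageURL(base, token), form)
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return fmt.Errorf("telegram returned %s", resp.Status)
	}
	return nil
}

func main() {
	// Hypothetical environment variable names.
	token, chat := os.Getenv("TG_BOT_TOKEN"), os.Getenv("TG_CHAT_ID")
	msg := backupMessage("db-0", time.Now().Format("2006-01-02"), 512<<20)
	if token == "" || chat == "" {
		fmt.Println("dry run:", msg)
		return
	}
	client := &http.Client{Timeout: 10 * time.Second}
	if err := notify(client, "https://api.telegram.org", token, chat, msg); err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
}
```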

Log Collection

All Quaily service processes run through systemd, so logs are just syslog. The simplest configuration is to set up rsyslog and send logs to a centralized log server, stored under /var/log/hosts/HOSTNAME.

For business instances, configure rsyslog.conf:

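    # Keep the full hostname in forwarded messages.
    $PreserveFQDN on

    # Queue settings apply to the next action, so they come before the forwarding rule.
    $ActionQueueType LinkedList
    $ActionQueueFileName queue
    $ActionQueueMaxDiskSpace 1g
    $ActionQueueSaveOnShutdown on
    $ActionResumeRetryCount -1

    # Forward everything over TCP (@@) to the log server.
    *.* @@LOG_SERVER_ADDR:514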

For log instances, configure rsyslog.conf:

    module(load="imtcp")
    input(type="imtcp" port="514")

    $AllowedSender TCP, 127.0.0.1, 172.26.0.0/24

    template(name="PerHostLog" type="string" string="/var/log/hosts/%HOSTNAME%/%PROGRAMNAME%.log")
    *.* action(type="omfile" DynaFile="PerHostLog")

When needing to observe real-time logs, merge them together:

    multitail -cS slog -f /var/log/hosts/quail-0/quail.log -cS slog -I /var/log/hosts/quail-1/quail.log ...

Scaling

Since I neither know nor want to learn k8s or anything like it, I wrote a script that configures everything on a fresh Linux instance in a single run, including systemd, rsyslog, etc.

When batch operations are needed, I use zellij's sync mode (ctrl + t, s), which can input content to all panes under one tab simultaneously.

Monitoring

Monitoring uses UptimeRobot and Sentry.

Business alerts use Telegram.

Building

Most builds are completed through GitHub Actions. A few are done on the packaging machine and uploaded to instances through scripts, like this:

    #!/bin/bash
    # Build once locally, then copy the binary to each instance and restart it.
    set -e

    declare -a arr=(
      "inst-0"
      "inst-1"
      "inst-2"
      # ...
    )

    echo "📦 build..."
    # Use the latest tag as the version embedded in the binary.
    VER=$(git describe --tags --abbrev=0)
    GOOS=linux GOARCH=amd64 CGO_ENABLED=0 go build -o quail -ldflags="-X main.Version=$VER -X ..."

    for host in "${arr[@]}"
    do
      echo "📤 scp to $host"
      scp quail "$host:/opt/quail/quail-new"
      echo "🚀 restart..."
      # Upload under a temporary name, then swap it in with a single rename.
      ssh "$host" "cd /opt/quail && mv quail-new quail && sudo systemctl restart quail.service"
      echo "🙆 deployed!"
    done
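
The `-X main.Version=$VER` part of the build command sets a string variable in the Go program at link time. A minimal sketch of the receiving side looks like this; the variable name `Version` comes from the script, while `versionLine` and the `dev` default are assumptions for illustration.

```go
package main

import "fmt"

// Version is overwritten at build time by -ldflags "-X main.Version=...".
// The -X flag only sets package-level string variables, so this must be
// a var, not a const. "dev" is what an ordinary `go build` reports.
var Version = "dev"

// versionLine returns the text a --version flag or a health endpoint might show.
func versionLine() string {
	return "quail " + Version
}

func main() {
	fmt.Println(versionLine())
}
```

Embedding the tag this way lets each instance report exactly which build it is running, with no version file to keep in sync.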

So the backend plus operations looks like this:

AI

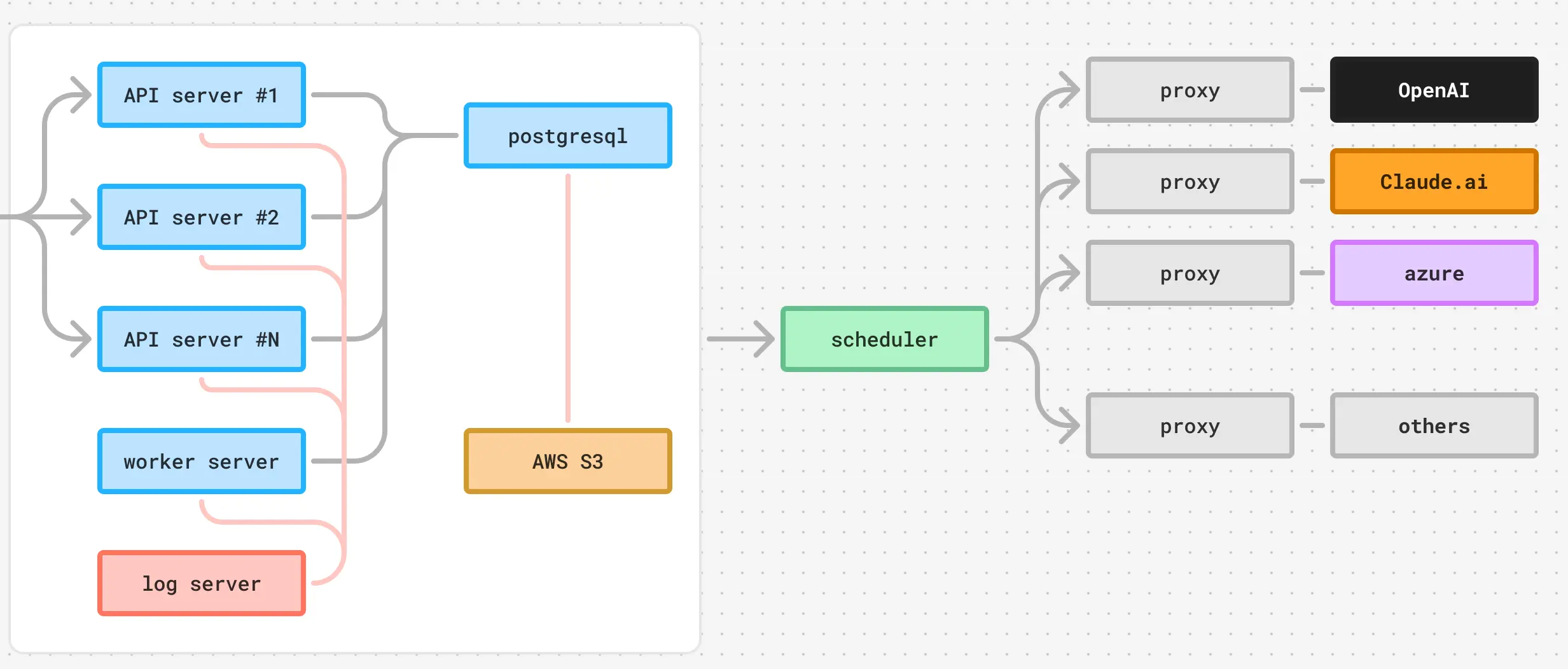

Quaily uses some AI services, including OpenAI and Claude.ai. Because AI-related work often involves messy concerns such as retries, load balancing, formatting, task routing, and CoT, it is handled by a separate set of services consisting of a scheduler and several proxies, where:

- The scheduler is responsible for scheduling AI tasks. For example, it decides which proxy handles which task, and reschedules a task when a proxy returns 429.

- Proxies are responsible for handling tasks. For example, a proxy dedicated to small tasks can use 4o-mini, while another responsible for text tasks can use claude-ai-3-5-sonnet-v2.
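
The division of labor between scheduler and proxies can be sketched in a few lines of Go. Everything here is hypothetical: the `Proxy` interface, the task kinds `small` and `text`, and the policy of trying the next proxy in order on a 429 are assumptions about one way such a scheduler could work, with fake proxies replacing real model calls.

```go
package main

import (
	"errors"
	"fmt"
)

// errRateLimited stands for an upstream HTTP 429.
var errRateLimited = errors.New("429 too many requests")

// Proxy is a hypothetical interface for one AI proxy.
type Proxy interface {
	Name() string
	Handle(prompt string) (string, error)
}

// Scheduler maps a task kind to the proxies allowed to handle it.
type Scheduler struct {
	routes map[string][]Proxy
}

// Dispatch tries each proxy registered for the kind in order and moves on
// to the next one when a proxy reports rate limiting.
func (s *Scheduler) Dispatch(kind, prompt string) (string, error) {
	proxies := s.routes[kind]
	if len(proxies) == 0 {
		return "", fmt.Errorf("no proxy for task kind %q", kind)
	}
	for _, p := range proxies {
		out, err := p.Handle(prompt)
		if errors.Is(err, errRateLimited) {
			continue // reschedule on the next proxy
		}
		return out, err
	}
	return "", fmt.Errorf("all proxies for %q are rate limited", kind)
}

// fakeProxy simulates a proxy; limited makes it always return 429.
type fakeProxy struct {
	name    string
	limited bool
}

func (f fakeProxy) Name() string { return f.name }

func (f fakeProxy) Handle(prompt string) (string, error) {
	if f.limited {
		return "", errRateLimited
	}
	return f.name + ": " + prompt, nil
}

func main() {
	s := &Scheduler{routes: map[string][]Proxy{
		"small": {fakeProxy{"small-a", true}, fakeProxy{"small-b", false}},
		"text":  {fakeProxy{"text-a", false}},
	}}
	fmt.Println(s.Dispatch("small", "summarize"))
	fmt.Println(s.Dispatch("text", "rewrite"))
}
```

Keeping retries and routing in the scheduler means the main backend only submits a task and waits for a result, regardless of which model ends up serving it.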

It looks something like this:

Above is Quaily's technical architecture. The complete diagram is as follows: